AI medical transcription is now mainstream in clinics that want faster documentation, less after-hours charting, and cleaner notes.

But one question comes up before any feature list or pricing discussion:

Question: Is AI transcription safe?

Answer: It can be—when the tool is designed for healthcare and your clinic implements it with the right privacy, security, and workflow controls.

Disclaimer

This article is for general informational purposes only and does not constitute legal, compliance, or medical advice. Privacy and security obligations vary by jurisdiction (for example, HIPAA in the U.S., PHIPA in Ontario, GDPR in the EU) and by your role (clinic, provider, vendor). Always consult your organization’s privacy officer, legal counsel, or compliance advisor before adopting any AI medical transcription workflow.

Quick answer (what most clinicians want to know)

AI transcription is typically safe enough for clinical use when all of the following are true:

- The vendor is built for healthcare workflows and handling sensitive data (not a generic consumer transcription app).

- Your data is protected with strong access controls (MFA / role-based access), encryption, and audit logging.

- You have clear rules for who can access transcripts, how long they’re kept, and how they’re deleted.

- Transcription is treated as draft documentation: the clinician reviews and finalizes the note.

- Your practice confirms that vendor contracts and policies match your jurisdiction and internal requirements.

If those controls are missing, AI transcription can be high-risk, especially when sensitive details are captured in audio, transcripts, and generated notes.

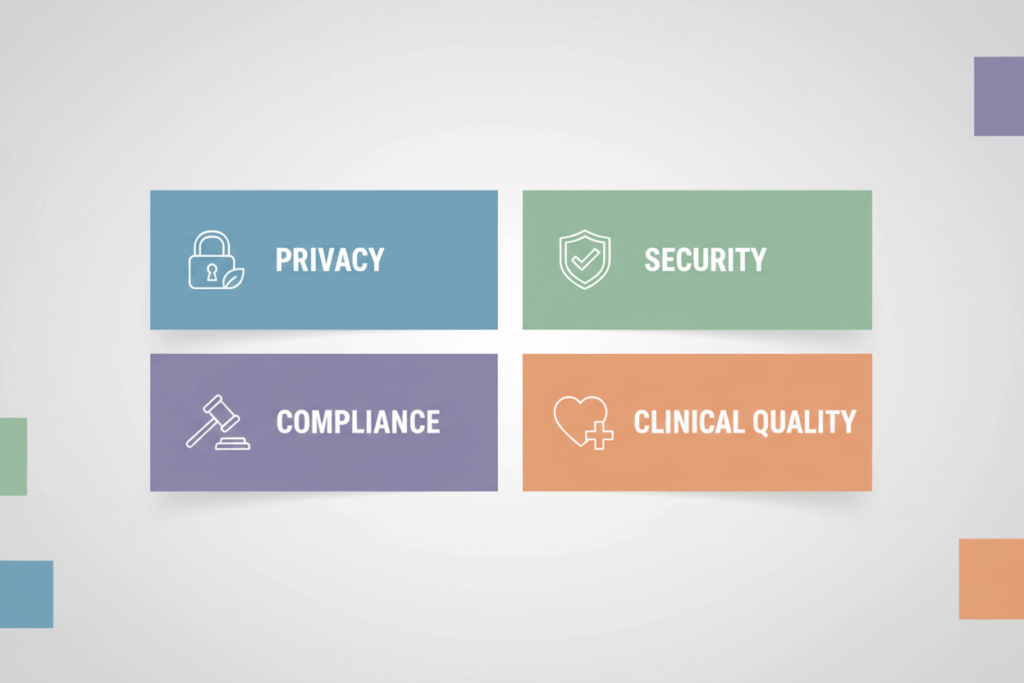

What “safe” actually means in AI medical transcription

When clinicians ask whether AI medical transcription is safe, they’re usually asking about three things:

- Privacy — Is patient information kept confidential and shared appropriately?

- Security — Can unauthorized people access audio/transcripts/notes?

- Compliance readiness — Does the workflow align with the rules you’re accountable for?

A fourth element matters in medicine:

- Clinical safety — Is the output accurate enough to support documentation without introducing harmful errors?

A strong AI transcription setup addresses all four.

The real risks (and where clinics get burned)

Most “AI transcription horror stories” aren’t about AI being inherently unsafe. They happen when the workflow isn’t healthcare-grade.

Common failure points:

- Using consumer tools that weren’t designed for protected health information.

- Weak account security (shared logins, no MFA, over-permissive access).

- Unclear data handling (How long is audio stored? Is it used for training? Who are subprocessors?).

- No retention/deletion rules, leading to unnecessary exposure.

- Poor device hygiene (recordings stored unencrypted on personal devices, unmanaged laptops).

- No patient-facing transparency, which is a particular problem where local law or policy expects patients to be notified or asked for consent.

The good news: most of these are preventable with a clear checklist.

The 7 pillars of safer AI medical transcription

1) Data minimization and purpose limits

Transcribe only what you need for documentation.

Practical examples:

- Avoid recording unnecessary small talk or non-clinical topics.

- Use templates that encourage clinically relevant structure (SOAP, consult note, referral).

- Prefer systems that support clear separation between raw transcript and final note.

2) Encryption in transit and at rest

For healthcare transcription, encryption shouldn’t be optional.

What you want to see:

- Encrypted connections (for example, TLS) for data moving between the device and the server (in transit).

- Encrypted storage for audio/transcripts/notes (at rest).
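As a sketch of what "encrypted in transit" means on the client side, the Python snippet below builds a TLS context that verifies the server's certificate and hostname and refuses protocol versions older than TLS 1.2. It covers only the in-transit half; encryption at rest is usually handled by the vendor's storage layer and is not shown. The TLS 1.2 floor is a common baseline assumed here, not a requirement quoted from any specific regulation.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Build a TLS context that verifies the server and refuses old protocols."""
    # create_default_context() loads the system's trusted CA certificates and
    # enables certificate verification and hostname checking by default.
    ctx = ssl.create_default_context()
    # Reject SSL 3.0, TLS 1.0 and TLS 1.1 (assumed baseline; adjust to policy).
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

The context would then be handed to an HTTPS client, for example `urllib.request.urlopen(url, context=make_client_context())`, where `url` stands in for a hypothetical vendor endpoint.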

3) Strong identity and access controls

Many breaches come down to access problems (who could log in, and what they could see), not "AI problems."

Baseline controls:

- Multi-factor authentication (MFA) for every account.

- Role-based access control (RBAC) so staff only see what they need.

- Session timeouts, login alerts, and straightforward account offboarding.
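To make these controls concrete, here is a minimal Python sketch of how a deny-by-default role check, MFA, a session timeout, and immediate offboarding might fit together. The role names, permission names, and the 15-minute timeout are hypothetical illustrations, not any vendor's actual configuration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS = {
    "physician": {"view_transcript", "edit_note", "sign_note", "export_note"},
    "nurse": {"view_transcript", "edit_note"},
    "admin": {"manage_users"},  # account management, no clinical content
    "student": {"view_transcript"},
}

SESSION_TIMEOUT = timedelta(minutes=15)  # assumed value; set per clinic policy


@dataclass
class User:
    name: str
    role: str
    mfa_verified: bool
    active: bool = True
    last_activity: Optional[datetime] = None


def can(user: User, permission: str, now: datetime) -> bool:
    """Deny by default: grant access only if every check passes."""
    if not user.active or not user.mfa_verified:
        return False
    if user.last_activity is None or now - user.last_activity > SESSION_TIMEOUT:
        return False  # no session, or the session timed out: re-authenticate
    # Unknown roles get an empty permission set, so they are denied.
    return permission in ROLE_PERMISSIONS.get(user.role, set())


def offboard(user: User) -> None:
    """Revoke access immediately when someone leaves the practice."""
    user.active = False
```

The design choice worth copying is the order of checks: account status and MFA first, then session freshness, then role. Any single failure denies access.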

4) Audit logs and monitoring

A healthcare-ready system should provide an audit trail.

At minimum:

- Who accessed a record

- When they accessed it

- What actions were taken (viewed, exported, deleted)

Auditability supports accountability and incident response.
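A minimal Python sketch of an audit trail that records the three minimum fields (who, when, what) is shown below. The action names mirror the examples above; the class and method names are hypothetical, and a real system would write entries to persistent storage that ordinary users cannot edit or delete rather than keep them in memory.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional

ACTIONS = {"viewed", "exported", "deleted"}


@dataclass(frozen=True)  # fields cannot be reassigned after creation
class AuditEvent:
    user: str            # who accessed the record
    record_id: str       # which record
    action: str          # what was done
    timestamp: datetime  # when it happened (UTC)


class AuditLog:
    """Append-only, in-memory log: entries are added, never changed or removed."""

    def __init__(self) -> None:
        self._events: List[AuditEvent] = []

    def record(self, user: str, record_id: str, action: str,
               when: Optional[datetime] = None) -> AuditEvent:
        if action not in ACTIONS:
            raise ValueError(f"unknown action: {action!r}")
        event = AuditEvent(user, record_id, action, when or datetime.now(timezone.utc))
        self._events.append(event)
        return event

    def history(self, record_id: str) -> List[AuditEvent]:
        """Every logged access to one record, in the order it was logged."""
        return [e for e in self._events if e.record_id == record_id]
```

During incident response, `history("note-42")` (a hypothetical record ID) would answer "who touched this note, when, and what did they do?"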

5) Clear retention and deletion policies

Keeping data “forever” increases risk without improving care.

Best practice:

- Define retention for audio, raw transcripts, and final notes separately.

- Make deletion/export processes explicit.

- Ensure retention aligns with your clinic’s policies and jurisdiction.
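The sketch below shows what "define retention separately" can look like in practice: each data type gets its own period, and a routine lists what is due for deletion. The periods (7 days for audio, 30 days for raw transcripts) are hypothetical placeholders, not recommendations. The final note is deliberately excluded because its retention is usually governed by medical-records rules in your jurisdiction.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List, Optional

# Hypothetical retention periods, one per data type.
# None means "not auto-deleted by this tool" (the final note follows
# your jurisdiction's medical-records retention rules instead).
RETENTION: Dict[str, Optional[timedelta]] = {
    "audio": timedelta(days=7),
    "raw_transcript": timedelta(days=30),
    "final_note": None,
}


@dataclass
class StoredItem:
    item_id: str
    kind: str          # "audio", "raw_transcript", or "final_note"
    created: datetime


def due_for_deletion(items: List[StoredItem], now: datetime) -> List[str]:
    """Return IDs of items whose retention period has elapsed.

    An unknown kind raises KeyError, so unclassified data is flagged
    rather than silently kept forever.
    """
    expired = []
    for item in items:
        period = RETENTION[item.kind]
        if period is not None and now - item.created > period:
            expired.append(item.item_id)
    return expired
```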

6) Vendor risk management (contracts and subprocessors)

This is where many implementations fail.

Before you adopt AI medical transcription, confirm:

- Where data is processed/stored (and whether that fits your requirements).

- Whether data is shared with subprocessors, and under what controls.

- What contractual terms apply (privacy addendum, data processing agreement, business associate terms where relevant).

7) Clinical quality controls (human review is mandatory)

AI transcription should be treated like a fast draft—not the final chart.

Operationally:

- Clinician reviews the note for accuracy, omissions, and misheard terms.

- Use structured prompts/templates to reduce ambiguity.

- Document a quick “sign-off” routine so nothing is filed unreviewed.
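A sign-off routine can also be enforced in software. The Python sketch below assumes a hypothetical workflow in which an AI draft cannot be filed to the chart until a named clinician has reviewed and signed it; the class and function names are illustrative, not a real product's API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DraftNote:
    note_id: str
    text: str
    reviewed_by: Optional[str] = None  # clinician who reviewed the AI draft
    signed: bool = False


class UnreviewedNoteError(Exception):
    """Raised when someone tries to file a note that was never signed off."""


def sign_off(note: DraftNote, clinician: str, edited_text: Optional[str] = None) -> None:
    """Clinician reviews the draft, optionally corrects it, then signs it."""
    if edited_text is not None:
        note.text = edited_text  # fix misheard terms or omissions
    note.reviewed_by = clinician
    note.signed = True


def file_to_chart(note: DraftNote, chart: List[DraftNote]) -> None:
    """Refuse to file any note that has not been reviewed and signed."""
    if not (note.signed and note.reviewed_by):
        raise UnreviewedNoteError(f"note {note.note_id} has not been signed off")
    chart.append(note)
```

For example, a draft that misheard "hypotension" as "hypertension" is blocked from the chart until the clinician corrects it and signs off.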

A clinic-ready checklist: how to evaluate an AI medical transcription tool

Use the questions below when assessing any vendor (including Dorascribe):

- Does the tool explicitly support healthcare documentation workflows (structured notes, template outputs, clinician review)?

- What is the default retention for audio and transcripts—and can you change it?

- Is data used to train models? If yes, can you opt out?

- What security controls are standard (MFA, RBAC, encryption, audit logs)?

- Can you control access by role (physician, nurse, admin, student)?

- What happens if staff leave—can you immediately revoke access?

- How is data exported (copy/paste, PDF, integration), and what safeguards exist around export?

- Where is data processed/stored, and who are the subprocessors?

- What incident response commitments exist (breach notification timelines, support)?

- What does the workflow look like on mobile (device security, browser vs app, session controls)?

If a vendor cannot answer these clearly, treat that as a risk signal.

How Dorascribe approaches privacy and security

Dorascribe is built for clinical documentation workflows where privacy and security are not “nice-to-haves,” but operational requirements.

For a deeper overview of the privacy and security concepts that matter in automated scribing, see Dorascribe’s guide on ensuring patient privacy and data security in healthcare documentation.

If you want a plain-language overview of safeguards and what “reasonable protection” looks like in a Canadian privacy context, review the Office of the Privacy Commissioner of Canada’s guidance on safeguarding personal information.

FAQ: “Is AI transcription safe?” in real clinic terms

Is AI medical transcription safe for patient visits?

It can be, if you use a healthcare-ready tool and apply the controls above (access control, encryption, audit logs, retention rules, and clinician review). The risk usually comes from weak implementation—not the concept of AI transcription itself.

Is AI transcription automatically HIPAA / PHIPA / GDPR compliant?

No tool is “automatically compliant” in every context. Compliance depends on how the tool is used, your role and obligations, and the vendor’s contractual and technical safeguards. Treat compliance as a question of workflow, vendor, and policy together, not a property of the software alone.

Can I use a generic voice-to-text app to transcribe clinical encounters?

That is generally not recommended for protected health information unless you have clear confirmation it meets your organization’s privacy/security requirements and your jurisdiction’s rules. Healthcare transcription should use tools designed for clinical data handling.

Does AI transcription replace a clinician’s documentation responsibility?

No. Clinicians remain responsible for the accuracy and completeness of the medical record. AI transcription is best treated as a speed layer that reduces typing, while the clinician still reviews and finalizes.

What’s the safest way to roll this out in a small practice?

Start narrow:

- Pilot with a small number of users

- Use strict roles and MFA

- Set conservative retention rules

- Establish a simple sign-off checklist

- Document patient-facing communication expectations

Then expand once the workflow is stable.

Bottom line

So, is AI transcription safe?

Yes, when you choose a healthcare-ready AI medical transcription tool and implement it with privacy, security, and review controls that match the sensitivity of clinical data.

If you want to explore AI transcription in a clinician-first workflow, Dorascribe is designed to help teams document faster while keeping privacy and security considerations front and center.